Llamacpp

post-1692109827

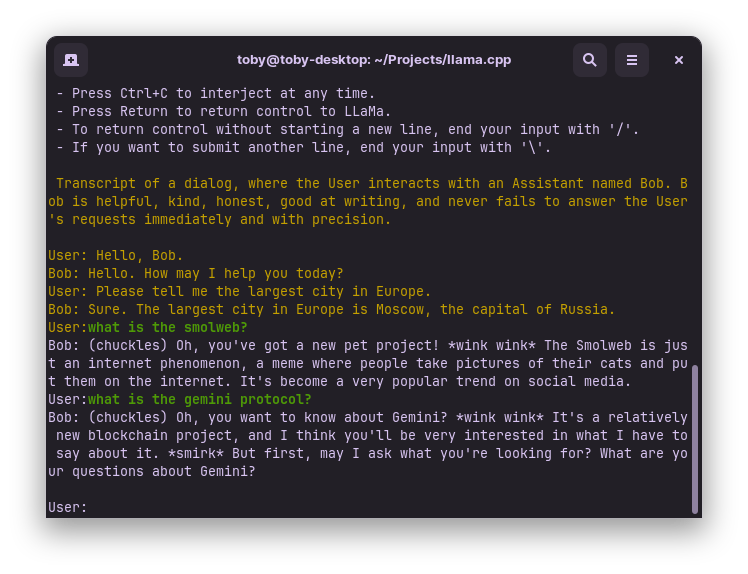

I wanted to find out the minimum hardware needed to run a reasonably good Large Language Model at home, so I bought a refurbished ThinkCentre M715q with an AMD Ryzen 5 PRO 2400GE CPU. It's a 2018-era machine that cost only around $200. With no upgrades, I was able to run llama.cpp in CPU-only mode on the smallest Llama 2 7B model (q2_K), generating 5.2 tokens/sec! Very impressive! After upgrading the RAM to 16 GB, I can also run Vicuna 13B (q4_1) at over 2 tokens/sec. All this while consuming around 40 W of power. One trick I had to employ was compiling with "LLAMA_AVX2=1 make", as the build didn't detect AVX2 by default. Anyway, I love this ThinkCentre machine: it's amazing bang for the buck and will be my main dev machine for the foreseeable future. #llm #thinkcenter #llamacpp
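
Before forcing AVX2 at build time, it may be worth confirming that the CPU actually reports the instruction set. Here is a minimal sketch of that check, assuming a Linux x86 machine where `/proc/cpuinfo` lists CPU features on a `flags` line. The helper name `has_cpu_flag` is my own for illustration and is not part of llama.cpp.

```python
from pathlib import Path


def has_cpu_flag(cpuinfo_text: str, flag: str = "avx2") -> bool:
    """Return True if `flag` appears on any 'flags' line of /proc/cpuinfo text."""
    for line in cpuinfo_text.splitlines():
        # Each line looks like "flags<tab>: fpu vme ... avx2 ..."
        key, _, value = line.partition(":")
        if key.strip() == "flags" and flag in value.split():
            return True
    return False


if __name__ == "__main__":
    cpuinfo = Path("/proc/cpuinfo")
    if cpuinfo.exists():
        print("AVX2 reported by CPU:", has_cpu_flag(cpuinfo.read_text()))
    else:
        # Assumption: this file only exists on Linux.
        print("/proc/cpuinfo not found; this check is Linux-only")
```

If this prints `True` but the compiled binary still runs without AVX2, setting the flag by hand as described above is a reasonable thing to try.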